Events are generated by various Kubernetes components, such as the scheduler, kubelet, and controllers, to capture information related to pods, nodes, and other resources.

An Event is recorded when, for example, a pod is scheduled, a container crashes, or a node runs out of disk space.

Think of Kubernetes Events as a chronicle of your cluster’s activities. They offer a centralized view of all the important activities for various cluster resources.

Table of Contents

- A few essential learnings about K8S Events

- Practical use cases of kubectl events

- Conclusion

- References

A few essential learnings about K8S Events

kubectl get events VS. kubectl events

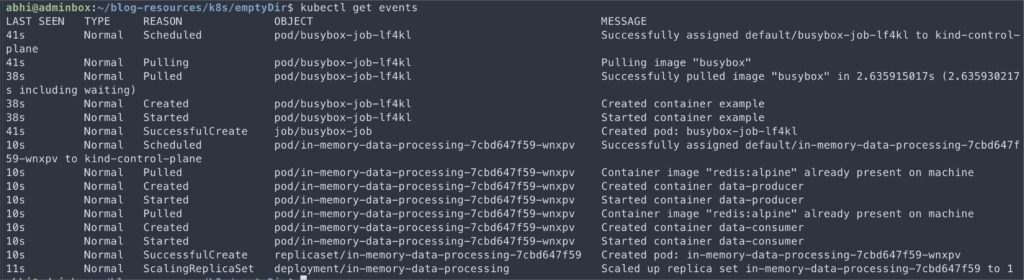

You might be familiar with the kubectl get events command. It lists all the events in the currently active namespace.

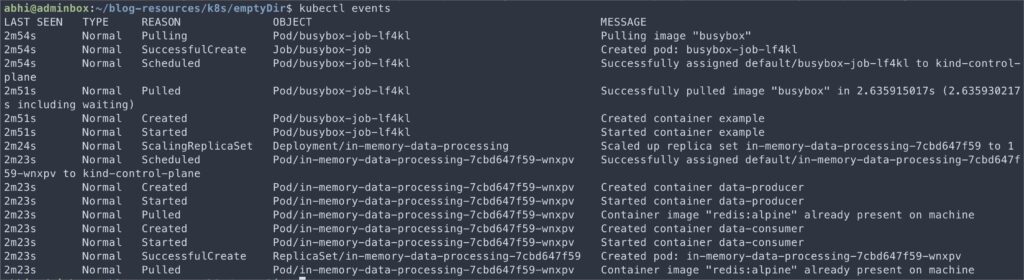

Now, run kubectl events. We get similar output.

However, did you spot the difference? Look at the first column, LAST SEEN. By default, kubectl events sorts the events by the time they occurred; kubectl get events does not.

For kubectl get events, we have to add the --sort-by='.metadata.creationTimestamp' option to get sorted events.

kubectl get events --sort-by='.metadata.creationTimestamp'

So why are there two different commands to get the events? Let’s find out in the next section.

tl;dr: There are two versions of the Events API.

Two different versions

Kubernetes v1.19 introduced the new events.k8s.io/v1 API version to provide a more structured and expressive format for Events. This new version brings some cool features and enhancements to the table.

On the other hand, the v1 API version is the predecessor of the new Events API. It’s been around since the early days of Kubernetes and is still supported for backward compatibility. It gets the job done but lacks some of the fancy features of its younger sibling.

You can learn more about the implementations of both using kubectl explain.

> kubectl explain events --api-version v1

> kubectl explain events --api-version events.k8s.io/v1

You’ll notice that the events.k8s.io/v1 version has additional fields and nested structures that provide more granular event information. For example:

- The regarding field in events.k8s.io/v1 specifies the Kubernetes object to which the Event is related, such as a Pod, Node, or Service. In the v1 version, this information is captured in the involvedObject field.

- The note field in events.k8s.io/v1 provides a human-readable description of the Event, offering more context and details about what happened. The v1 version uses the message field for a similar purpose.

- The reportingController and reportingInstance fields in events.k8s.io/v1 provide more explicit information about the controller responsible for emitting the Event. In the v1 version, this information is captured in the source.component field.

- The deprecatedCount, deprecatedLastTimestamp, and deprecatedSource fields in events.k8s.io/v1 are carried over from v1 and are present to ensure backward compatibility with the v1 Event type. They correspond to the count, lastTimestamp, and source fields in the v1 version, respectively.
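The field correspondence described above can be written down as a small lookup table. The following is only a sketch in Python: the function name `to_new_style` and the sample event are made up for illustration, and the API server's real conversion between the two versions handles more fields than this.

```python
# Field correspondence taken from the list above (core v1 -> events.k8s.io/v1).
# Illustrative only: this is not the API server's actual conversion logic.
V1_TO_EVENTS_K8S_IO = {
    "involvedObject": "regarding",
    "message": "note",
    "count": "deprecatedCount",
    "lastTimestamp": "deprecatedLastTimestamp",
    "source": "deprecatedSource",
}


def to_new_style(v1_event: dict) -> dict:
    """Rename core v1 Event fields to their events.k8s.io/v1 counterparts."""
    converted = {}
    for key, value in v1_event.items():
        # Fields without a listed counterpart (type, reason, ...) keep their name.
        converted[V1_TO_EVENTS_K8S_IO.get(key, key)] = value
    # Per the list above, source.component corresponds to reportingController.
    component = v1_event.get("source", {}).get("component")
    if component:
        converted["reportingController"] = component
    return converted


# Hypothetical sample event, trimmed to the fields discussed above.
sample = {
    "involvedObject": {"kind": "Pod", "name": "web-0"},
    "message": "Back-off restarting failed container",
    "count": 7,
    "lastTimestamp": "2024-01-01T10:03:00Z",
    "source": {"component": "kubelet", "host": "node-1"},
    "type": "Warning",
    "reason": "BackOff",
}

new_style = to_new_style(sample)
print(new_style["regarding"]["name"], "-", new_style["note"])
```

Comparing the output of the two kubectl explain commands remains the authoritative way to see every field.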

Temporary storage in etcd

By default, Events are retained in etcd for just 1 hour. After that, they are automatically removed to prevent etcd from getting cluttered with old and irrelevant data.

However, when configuring kube-apiserver, you can set the --event-ttl flag to the required duration to retain events longer.

If you need to keep events for longer than 1 hour, a better option is to use a tool such as kspan, kube-events, or kubernetes-event-exporter to ship events to an external store.

Event occurrence count

Take a look at the following example:

Type     Reason   Age                From     Message
----     ------   ----               ----     -------
Warning  BackOff  3m5s (x7 over 3m)  kubelet  Back-off restarting failed container

At first glance, you might think that Kubernetes is storing every single occurrence of this Event and intelligently aggregating them to show the count and timestamps. However, that’s only partially accurate.

In reality, Kubernetes doesn’t store each event occurrence. Instead, it keeps track of the event metadata, including the first occurrence timestamp, the last occurrence timestamp, and the total count of occurrences.

In the example above, Kubernetes is telling us that the BackOff Event happened a total of 7 times (hence the x7). The first value in the Age column (3m5s) is how long ago the Event was last seen, and the value after "over" (3m) is how long ago it was first seen.
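To make the "metadata only" idea concrete, here is a minimal sketch of that bookkeeping in Python. The names `EventRecord` and `EventStore`, and the choice of key, are assumptions made for illustration; this is not how the Kubernetes event recorder is actually implemented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class EventRecord:
    """One stored Event: summary metadata, not a log of every occurrence."""
    reason: str
    message: str
    first_seen: datetime
    last_seen: datetime
    count: int = 1


class EventStore:
    """Hypothetical store that folds repeated occurrences into one record."""

    def __init__(self):
        self._records = {}

    def record(self, obj: str, reason: str, message: str, when: datetime) -> EventRecord:
        # Assumption: occurrences are "the same" when object, reason and message match.
        key = (obj, reason, message)
        existing = self._records.get(key)
        if existing is None:
            existing = EventRecord(reason, message, first_seen=when, last_seen=when)
            self._records[key] = existing
        else:
            # Repeat occurrence: bump the counter and move the last-seen timestamp.
            existing.count += 1
            existing.last_seen = when
        return existing


store = EventStore()
start = datetime(2024, 1, 1, 10, 0, 0)  # hypothetical start time
for i in range(7):
    rec = store.record(
        "Pod/web-0", "BackOff", "Back-off restarting failed container",
        start + timedelta(seconds=30 * i),
    )

print(rec.count, rec.first_seen.time(), rec.last_seen.time())
```

Seven occurrences leave a single record behind, which is why the Age column can show a count and two timestamps but not the time of each individual occurrence.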

Event Freshness and Time-to-Live (TTL)

How does this event aggregation work with Kubernetes’ event retention policy? By default, Kubernetes forgets events after one hour. So, how can we see an event that says it happened multiple times over a more extended period?

The answer lies in how Kubernetes refreshes the Event’s time-to-live (TTL) each time it occurs. When an event is first recorded, it is given a TTL of one hour. However, if the same Event occurs again within that hour, Kubernetes updates the last occurrence timestamp and resets the TTL to one hour from that point.

Let’s consider an example scenario:

- An event first occurs at 10:00 AM and is recorded with a count of 1 and a TTL of one hour.

- The same Event occurs again at 10:30 AM. Kubernetes updates the Event’s last occurrence timestamp to 10:30 AM, increments the count to 2, and resets the TTL to one hour from 10:30 AM.

- The same Event keeps occurring every 30 minutes until 8:00 PM, 10 hours after the first occurrence.

- In this scenario, if you check the events after 10 hours, you’ll see an event with a count of 21 (1 initial occurrence + 20 occurrences every 30 minutes) and a timestamp range spanning the last 10 hours.
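The arithmetic of this scenario can be checked with a short simulation. This is a sketch under the assumptions stated in the scenario (a one-hour TTL that is reset on every occurrence, and one occurrence every 30 minutes); the date is arbitrary and the variable names are illustrative.

```python
from datetime import datetime, timedelta

TTL = timedelta(hours=1)          # default retention described above
INTERVAL = timedelta(minutes=30)  # gap between occurrences in the scenario

first_seen = datetime(2024, 1, 1, 10, 0)  # arbitrary date, 10:00 AM
last_seen = first_seen
count = 1
expires_at = first_seen + TTL

# The Event recurs every 30 minutes for 10 hours after the first occurrence.
when = first_seen + INTERVAL
while when <= first_seen + timedelta(hours=10):
    if when > expires_at:
        # The stored Event would already have expired: start a fresh record.
        first_seen, count = when, 0
    count += 1
    last_seen = when
    expires_at = when + TTL  # every occurrence resets the TTL
    when += INTERVAL

print(count, first_seen.time(), last_seen.time(), expires_at.time())
```

Because each gap (30 minutes) is shorter than the TTL (one hour), the expiry branch never fires and the record survives the whole 10 hours. Raising INTERVAL above one hour would make every occurrence start a new record with a count of 1.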

Events as an object

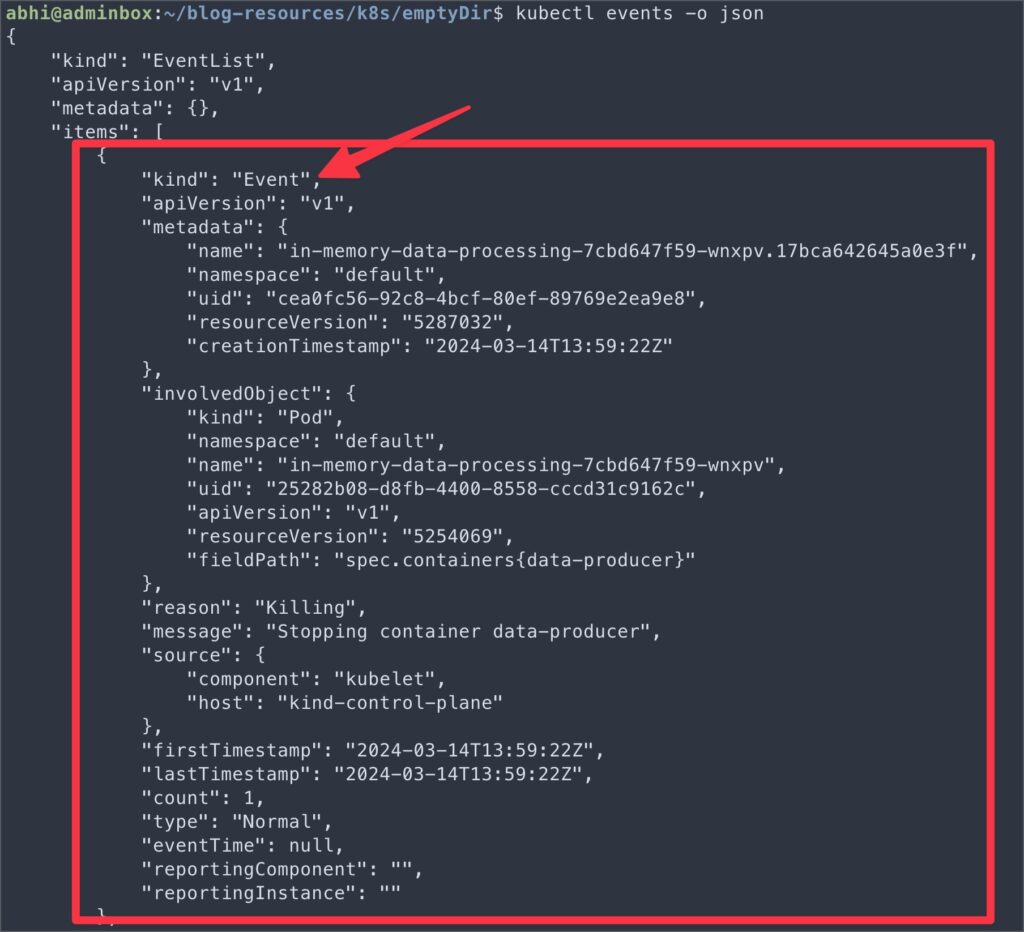

Like any other Kubernetes resource, an Event is an API object.

So, it has a specific structure and fields that define its characteristics. If you have worked with other Kubernetes objects, the format will look familiar.

Let’s take a closer look at the key components of an Event object:

kubectl events -o json

Practical use cases of kubectl events

List recent events in a namespace

One of the most basic and frequent uses of Events is to get an overview of recent activities in a specific namespace. You can use the following command to list the most recent Events in a namespace:

kubectl events --namespace <my-namespace>

OR

kubectl events -n <my-namespace>

This command will display a chronological list of Events that have occurred in the my-namespace namespace. It provides a quick snapshot of what’s been happening, including resource creations, deletions, and any issues encountered.

Watch events for a specific resource

When troubleshooting or monitoring a specific resource, such as a pod or a deployment, watching the Events related to that resource in real time can be helpful. You can use the --watch flag with the kubectl events command to stream Events for a specific resource continuously:

kubectl events --watch --for Pod/<pod-name>

OR

kubectl events --watch --for Deployment/<object-name>

OR

kubectl events --watch --for ReplicaSet/<object-name> --namespace <my-namespace>

List events of specific types

Kubernetes Events are categorized into two types, Normal and Warning.

You can filter Events based on their type to focus on specific categories of events. For example, to list only the Warning Events in a namespace, you can use the following command:

kubectl events --types=Warning --namespace <my-namespace>

OR

kubectl events --types=Warning --for Deployment/<object-name> --namespace <my-namespace>

Monitoring events related to security and access control

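Events can be valuable for monitoring security-related activity in your cluster. For example, Warning Events with the reason Failed can point to operations that were denied or did not complete. Note that authentication failures, unauthorized API requests, and changes to role-based access control (RBAC) policies are normally captured by the API server audit logs rather than by Events, so treat Events as a complement to audit logging.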

kubectl events --types=Warning -ojson -n <my-namespace> | jq '.items[] | select(.reason == "Failed")'

You can also use the built-in kubectl events --template flag instead of jq.
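If jq is not available, the same selection can be sketched in a few lines of Python. The JSON below is a hypothetical, heavily trimmed stand-in for the output of kubectl events -o json, and `select_by_reason` is an illustrative helper, not part of any Kubernetes client library.

```python
import json

# Hypothetical, trimmed stand-in for `kubectl events -o json` output.
raw = """
{"items": [
  {"type": "Warning", "reason": "Failed", "message": "Error: ImagePullBackOff",
   "involvedObject": {"kind": "Pod", "name": "web-0"}},
  {"type": "Warning", "reason": "BackOff", "message": "Back-off restarting failed container",
   "involvedObject": {"kind": "Pod", "name": "web-1"}},
  {"type": "Normal", "reason": "Scheduled", "message": "Successfully assigned default/web-2 to node-1",
   "involvedObject": {"kind": "Pod", "name": "web-2"}}
]}
"""


def select_by_reason(events_json: str, reason: str) -> list:
    """Return the events whose `reason` field equals the given value."""
    items = json.loads(events_json).get("items", [])
    return [event for event in items if event.get("reason") == reason]


for event in select_by_reason(raw, "Failed"):
    obj = event["involvedObject"]
    print(f'{obj["kind"]}/{obj["name"]}: {event["message"]}')
```

In practice the JSON would come from the kubectl command's standard output rather than from an inline string.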

Investigating node issues

Events can also help investigate issues related to Kubernetes nodes. You can use the following command to list Events associated with nodes:

kubectl events --types=Warning -ojson -n <my-namespace> | jq '.items[] | select(.source.host == "<node-name>")'

Conclusion

Remember, when something goes wrong in your Kubernetes cluster, you don’t always need to rely on complex observability tools to gather information for debugging.

By understanding the simple concept of Events and their behavior, you can quickly pinpoint issues, monitor resource health, and keep a watchful eye on security-related activities.

So, the next time you encounter a problem, consider exploring Events as a first step in your troubleshooting process. It might save you time and effort in resolving the issue.

References

- https://github.com/kubernetes/enhancements/blob/master/keps/sig-instrumentation/383-new-event-api-ga-graduation/README.md

- The Soul of a New Command: Adding ‘Events’ to kubectl – Bryan Boreham, Grafana Labs
