As a container orchestration tool, Kubernetes (K8S) ensures your applications are always running. However, applications can fail due to many reasons.

During such failures, Kubernetes tries to self-heal the application by restarting it, thanks to Pod restart policies.

We can take the term self-heal with a pinch of salt, as K8S can only attempt to restart the application; it can’t solve the application issue/ error. But where it’s a transient error that a simple restart can fix, this feature is handy.

In this article, we will practically understand how the restart policy works and how its values behave in certain conditions.

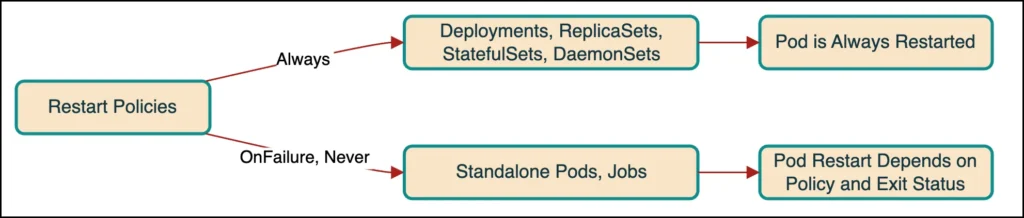

Here’s a quick summary for you.

Table of Contents

Resource Repo

To follow hands-on exercises, clone this git repo and change the directory to restartPolicy.

> git clone https://github.com/decisivedevops/blog-resources.git

> cd blog-resources/k8s/restartPolicyAlways

restartPolicy: Always ensures that a container is restarted whenever it exits, regardless of the exit status.

Alwaysis the default restart policy for the pods created by controllers like Deployment, ReplicaSet, StatefulSet, and DaemonSets.

Let’s test it practically.

- Apply the

deployment.yml

> kubectl apply -f deployment.yml

deployment.apps/busybox-deployment created- This simple busybox deployment starts a pod, runs a while loop, sleeps for 5 seconds, and exits with a non-zero exit code.

- Get the pod status.

> kubectl get pod

NAME READY STATUS RESTARTS AGE

busybox-deployment-7c5cfcf9b8-kp5d6 0/1 Error 2 (27s ago) 40- It restarts right after the loop ends and exits.

- Let’s check the pod restart policy value.

> kubectl get po -l app=busybox -o yaml | grep -i restartTwo points to note here.

- We have not explicitly set the

restartPolicyspec on the deployment.Alwaysis the default value. - The container exited with a non-zero status code, so you might think about what will happen if the container actually does its job and exits successfully, i.e., with

0exit code.

Let’s try this.

- Update the while loop in

deployment.ymlto exit with the status code0.

- 'while true; do echo "Hello Kubernetes!"; sleep 5; exit 0; done'- Re-apply the deployment and observe the pod restart count.

> kubectl apply -f deployment.yml

deployment.apps/busybox-deployment configured

> kubectl get po -l app=busybox

NAME READY STATUS RESTARTS AGE

busybox-deployment-7c5cfcf9b8-d5dzj 0/1 Completed 1 (7s ago) 14s

# you can see a differance in the STAUTS column.

# with non-zero exit code, it will be Error.

# with zero exit code, it will be Completed.- However, it’s still restarting.

Wait, our pod’s work was completed successfully and exited with a proper exit status, so why is it in a restart loop?

This restart behavior is inherent to the working of Deployments to ensure that a specified number of pods are always running.

But what if we want to run a pod, complete the task, and exit? That’s where the other values of restartPolicy are helpful.

But there’s a catch with those values.

OnFailure and Never

As names suggest,

restartPolicy: OnFailureensures that a pod is restarted only when it fails OR exits with non-zero exit stats.restartPolicy: Neverdoes not restart the pod once it fails or exits.

Demo time.

- Update the

deployment.ymlto includerestartPolicyasOnFailure.

spec:

containers:

- name: busybox

image: busybox

args:

- /bin/sh

- -c

- 'while true; do echo "Hello Kubernetes!"; sleep 5; exit 0; done'

restartPolicy: OnFailure- Re-apply the deployment.

> kubectl apply -f deployment.yml

The Deployment "busybox-deployment" is invalid: spec.template.spec.restartPolicy: Unsupported value: "OnFailure": supported values: "Always"- Typo maybe? It doesn’t look like it as it says

supported values: “Always”. What happened to the other two then? - Turns out, values

OnFailureandNeverare only applicable when,

– The pod is launched on its own (i.e., without controller like Deployment, ReplicaSet, StatefulSet, and DaemonSets), and

– When a pod is started by a Job (including CronJob). - If you want to dive into the conversation about whys and hows about this, there is a long-running discussion thread on Github.

To get our task done of starting the pod, getting it to complete the job, and exiting, and not restarting, we need a Job controller.

job.ymldefines a simple Job that starts a pod, prints out a string, and exits. It hasrestartPolicyasOnFailure.- Apply

job.yml.

> kubectl apply -f job.yml

job.batch/busybox-job created- Check the pod status.

> kubectl get pod --field-selector=status.phase=Succeeded

NAME READY STATUS RESTARTS AGE

busybox-job-hn69d 0/1 Completed 0 1m- Now, it’s not restarted after completion.

You can test the below configurations to get further idea about how restartPolicy works for Job.

- Update the

job.ymlto exit the pod with a non-zero status code.

command: ["/bin/sh", "-c", "echo Hello Kubernetes! && exit 1"]

> kubectl delete -f job.yml

> kubectl apply -f job.yml

# ------

# pod should be in restart loop

> kubectl get pod2. Update the restartPolicy to Never and check the pod status.

Conclusion

A few people pointed out on this thread that having values Never and OnFailure is helpful in cases where we need to debug a pod that is having issues only in a Kubernetes environment.

Also, one point ( this isn’t very clear) is the official K8S documentation does not clearly state the working of these different values yet.

Until we have some clarification on these values from the official K8S team, here are a few key takeaways.

- Restart Policies Define Pod Resilience: The

Alwaysrestart policy, the default for Pods managed by deployments and other controllers ensures continuous availability by restarting containers regardless of their exit status. This policy is required for applications that must be available at all times. - Context-Specific Policy Usage: While

Alwaysis suitable for ongoing applications,OnFailureandNeverrestart policies are specifically helpful for standalone Pods (a rare case) or those managed by Jobs and CronJobs, catering to batch jobs and tasks that should not restart once completed. - Deployment Limitations and Job Flexibility: Deployments enforce the

Alwayspolicy to maintain service availability, highlighting a limitation for use cases requiring no restarts. Jobs offer flexibility, allowing forOnFailureandNeverpolicies to ensure tasks run to completion as intended without unnecessary restarts.

🙏 Thanks for your time and attention all the way through!

Let me know your thoughts/ questions in the comments below.

If this guide sparked a new idea,

a question, or desire to collaborate,

I’d love to hear from you:

🔗 Upwork

Till we meet again, keep making waves.🌊 🚀

Leave a Reply