Most applications running in containers write their logs to stdout/stderr. When the application fails, these logs are the first place to start debugging.

In Kubernetes, the container field terminationMessagePolicy configures how these termination logs are captured.

Let’s understand how terminationMessagePolicy works and how to configure it.


File

When terminationMessagePolicy is set to File, Kubernetes reads the termination message from a file that the application writes to. The default location is /dev/termination-log.

Let’s test this using a simple Python application.

Clone this git repo and change into the terminationMessagePolicy folder.

> git clone https://github.com/decisivedevops/blog-resources.git

> cd blog-resources/k8s/terminationMessagePolicy

frequent_crash.py is a Python script that runs in a continuous loop and compares a randomly generated value to a fixed value (0.1 in this case). If the random value is less than the fixed value, the script raises a value error and exits. It also writes the termination logs to /dev/termination-log by default.
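To make the behaviour described above concrete, here is a minimal sketch of such a script. It is not the code from the repository: the function names, the `rng` parameter, and the use of a temporary file in place of /dev/termination-log are assumptions made so the example runs outside a container.

```python
import os
import random
import tempfile

THRESHOLD = 0.1  # fixed value the random number is compared against


def write_termination_message(path, message):
    """Write the final message to the file Kubernetes would read."""
    with open(path, "w") as file:
        file.write(message)


def run_until_failure(path, rng=random.random, threshold=THRESHOLD):
    """Loop until a random value drops below the threshold, then fail."""
    print("Starting the application...")
    while True:
        value = rng()
        print(value)
        if value < threshold:
            write_termination_message(
                path, "Application error: Data processing error"
            )
            raise ValueError("Data processing error")


if __name__ == "__main__":
    # Assumption: a temporary file stands in for /dev/termination-log.
    demo_path = os.path.join(tempfile.mkdtemp(), "termination-log")
    try:
        run_until_failure(demo_path)
    except ValueError:
        print("An error occurred, exiting...")
    with open(demo_path) as file:
        print("termination message:", file.read())
```

Passing the random source in as a parameter keeps the loop easy to test with a fixed sequence of values.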

Now, create a containerized application.

> docker build -t decisivedevops/k8s-restless-restarter:v1.0.0 .

Go through deployment.yml once. There is no configuration related to terminationMessagePolicy in it yet.

Deploy the application.

> kubectl apply -f deployment.yml

Once the pod is started, let’s go through its logs.

> POD=$(kubectl get pod -l app=frequent-crash -o jsonpath="{.items[0].metadata.name}")

> kubectl logs --follow $POD

Starting the application...

0.8604129141870653

0.8400180957417097

0.030886943534652378

An error occurred, exiting...

It will fail once the random value is less than 0.1.

Now, let’s inspect the pod manifest and find the configuration for terminationMessagePolicy.

> kubectl get pod $POD -o yaml

You should see two values for this container. These are set by default by Kubernetes.

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

terminationMessagePath configures which file the termination message is written to and read from.

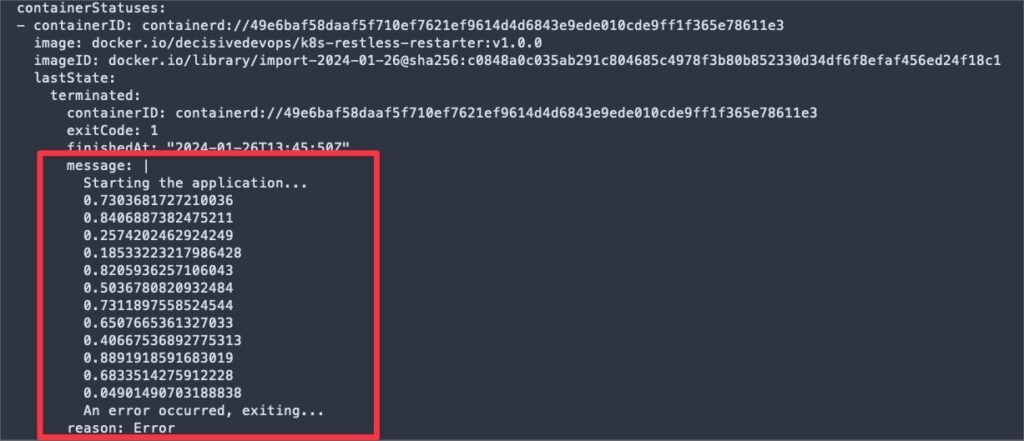

Also, the termination message is under the containerStatuses key in the last few lines.

    containerStatuses:
    - containerID: ****
      lastState:
        terminated:
          message: 'Application error: Data processing error'
          reason: Error

To print only the termination message, you can use a go-template.

> kubectl get pod $POD -o go-template='{{range .status.containerStatuses}}{{printf "%s:\n%s\n\n" .name .lastState.terminated.message}}{{end}}'

That’s how the default values for terminationMessagePolicy and terminationMessagePath work.
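If you prefer to post-process the pod status in a script, the same lookup can be sketched in Python. This assumes you have the output of `kubectl get pod $POD -o json`. The sample document below is a trimmed, illustrative stand-in, and `termination_messages` is a hypothetical helper name.

```python
import json

# Illustrative sample shaped like `kubectl get pod <name> -o json`,
# trimmed to the fields used here.
SAMPLE_POD_JSON = """
{
  "status": {
    "containerStatuses": [
      {
        "name": "frequent-crash-container",
        "lastState": {
          "terminated": {
            "message": "Application error: Data processing error",
            "reason": "Error"
          }
        }
      }
    ]
  }
}
"""


def termination_messages(pod):
    """Map each container name to its last termination message (or None)."""
    messages = {}
    for status in pod.get("status", {}).get("containerStatuses", []):
        terminated = status.get("lastState", {}).get("terminated") or {}
        messages[status["name"]] = terminated.get("message")
    return messages


pod = json.loads(SAMPLE_POD_JSON)
for name, message in termination_messages(pod).items():
    print(f"{name}: {message}")
```

A container that has never terminated, or that left no message, maps to None instead of raising an error.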

Remember, we can only see this termination message because the application that we are running writes these logs to the default file location, /dev/termination-log.

This is the function we have for that.

    import os

    # Path the application writes to; defaults to the Kubernetes default location.
    termination_message_file = os.getenv('TERMINATION_MESSAGE_FILE', '/dev/termination-log')

    def write_termination_message(message):
        # Overwrite the file with the final message before exiting.
        with open(termination_message_file, 'w') as file:
            file.write(message)

Let’s update the value of the TERMINATION_MESSAGE_FILE variable and re-run the application.

Add the env var below to deployment.yml and reapply.

    containers:
    - name: frequent-crash-container
      image: decisivedevops/k8s-restless-restarter:v1.0.0
      env:
      - name: TERMINATION_MESSAGE_FILE
        value: /dev/new-termination-log

> kubectl apply -f deployment.yml

deployment.apps/frequent-crash configured

Once the pod is restarted, take a look at the termination message.

> POD=$(kubectl get pod -l app=frequent-crash -o jsonpath="{.items[0].metadata.name}")

> kubectl get pod $POD -o go-template='{{range .status.containerStatuses}}{{printf "%s:\n%s\n\n" .name .lastState.terminated.message}}{{end}}'

frequent-crash-container:

%!s(<nil>)

We got %!s(<nil>).

Why? We only updated the script to write termination logs to a new location, but we didn’t update terminationMessagePath, so Kubernetes still reads the default file, which is now empty.
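This kind of mismatch is easy to miss, so here is a small sketch of a check you could run against a container spec loaded as a Python dict. `path_mismatch` is a hypothetical helper, not part of any Kubernetes tooling. It assumes the application reads its path from TERMINATION_MESSAGE_FILE and falls back to /dev/termination-log, as the script in this post does.

```python
DEFAULT_TERMINATION_PATH = "/dev/termination-log"


def path_mismatch(container, env_name="TERMINATION_MESSAGE_FILE"):
    """Return (app_path, k8s_path) if the two paths differ, else None."""
    # Path Kubernetes reads the termination message from.
    k8s_path = container.get("terminationMessagePath", DEFAULT_TERMINATION_PATH)
    # Path the application writes to, taken from its environment.
    env = {item["name"]: item.get("value") for item in container.get("env", [])}
    app_path = env.get(env_name, DEFAULT_TERMINATION_PATH)
    return None if app_path == k8s_path else (app_path, k8s_path)


container = {
    "name": "frequent-crash-container",
    "env": [
        {"name": "TERMINATION_MESSAGE_FILE", "value": "/dev/new-termination-log"}
    ],
}
print(path_mismatch(container))
```

For the spec above, the check reports that the application writes to /dev/new-termination-log while Kubernetes reads /dev/termination-log.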

Reapply the deployment using the configuration below and try to get the termination logs.

    containers:
    - name: frequent-crash-container
      image: decisivedevops/k8s-restless-restarter:v1.0.0
      terminationMessagePath: /dev/new-termination-log
      env:
      - name: TERMINATION_MESSAGE_FILE
        value: /dev/new-termination-log

If you are new to Kubernetes and have not encountered terminationMessagePolicy before, your application probably has no such log-writing function.

In such cases, Kubernetes provides a quicker way to get these termination logs: terminationMessagePolicy: FallbackToLogsOnError.

FallbackToLogsOnError

When terminationMessagePolicy is set to FallbackToLogsOnError, and the file at terminationMessagePath is empty and the container exited with an error, Kubernetes uses the last few lines of the container logs as the termination message.
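The decision can be pictured with a simplified model. This is not kubelet code: `resolve_termination_message` and `tail_logs` are hypothetical names, and None stands for "no message". The 2048-byte and 80-line limits on the fallback log output are the ones stated in the Kubernetes documentation.

```python
MAX_FALLBACK_BYTES = 2048  # fallback log limit from the Kubernetes docs
MAX_FALLBACK_LINES = 80


def tail_logs(logs):
    """Keep the last 80 lines or 2048 bytes of the logs, whichever is smaller."""
    tail = "\n".join(logs.splitlines()[-MAX_FALLBACK_LINES:])
    encoded = tail.encode("utf-8")
    if len(encoded) > MAX_FALLBACK_BYTES:
        # Drop any partial character left at the cut point.
        tail = encoded[-MAX_FALLBACK_BYTES:].decode("utf-8", errors="ignore")
    return tail


def resolve_termination_message(policy, file_content, logs, exit_code):
    """Simplified model of which text ends up as the termination message."""
    if file_content:
        # A non-empty termination message file is used under either policy.
        return file_content
    if policy == "FallbackToLogsOnError" and exit_code != 0:
        return tail_logs(logs)
    return None


logs = "Starting the application...\n0.86\n0.03\nAn error occurred, exiting..."
print(resolve_termination_message("File", "", logs, 1))
print(resolve_termination_message("FallbackToLogsOnError", "", logs, 1))
```

With an empty file, the File policy yields no message, while FallbackToLogsOnError returns the tail of the logs.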

Let’s test this practically.

Make the changes below to deployment.yml and reapply.

    containers:
    - name: frequent-crash-container
      image: decisivedevops/k8s-restless-restarter:v1.0.0
      # terminationMessagePath: /dev/new-termination-log
      terminationMessagePolicy: FallbackToLogsOnError
      env:
      - name: TERMINATION_MESSAGE_FILE
        value: /dev/new-termination-log

Here we have:

- Commented out terminationMessagePath, so it goes back to the default value, i.e. /dev/termination-log.

- Updated terminationMessagePolicy to FallbackToLogsOnError.

- Left TERMINATION_MESSAGE_FILE at the non-default path.

Once we apply this new config, here’s what should happen.

- The application fails and writes termination logs to a non-default location.

- Since the file at the default terminationMessagePath is empty, Kubernetes should retrieve the container logs and show them under containerStatuses as the termination message.

> kubectl apply -f deployment.yml

deployment.apps/frequent-crash configured

> POD=$(kubectl get pod -l app=frequent-crash -o jsonpath="{.items[0].metadata.name}")

> kubectl get pod $POD -o yaml

# OR you can use go-template

> kubectl get pod $POD -o go-template='{{range .status.containerStatuses}}{{printf "%s:\n%s\n\n" .name .lastState.terminated.message}}{{end}}'

Indeed, we can see the application output as the termination message.

Now you can uncomment the line terminationMessagePath: /dev/new-termination-log, reapply, and see the difference under containerStatuses: the message should again come from the file the application writes.

That’s how terminationMessagePolicy: FallbackToLogsOnError works.

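terminationMessagePolicy Limitations

Be mindful of the limitations below regarding the size of the termination logs, as listed in the Kubernetes documentation.

- The termination message of a single container is limited to 4096 bytes.

- The total message length across all containers in a pod is limited to 12KiB, divided equally among the containers.

- With FallbackToLogsOnError, the log output used as the message is limited to 2048 bytes or 80 lines, whichever is smaller.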
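Since the Kubernetes documentation puts the maximum length of a container's termination message at 4096 bytes, an application can trim its message before writing it. The helper below is a sketch, not part of the sample application: `truncate_message` is a hypothetical name, and keeping the start of the message (not the end) is a design choice made here.

```python
MAX_MESSAGE_BYTES = 4096  # per-container limit stated in the Kubernetes docs


def truncate_message(message, limit=MAX_MESSAGE_BYTES):
    """Trim a message to at most `limit` UTF-8 bytes without splitting a character."""
    encoded = message.encode("utf-8")
    if len(encoded) <= limit:
        return message
    # errors="ignore" drops a trailing partial multi-byte character, if any.
    return encoded[:limit].decode("utf-8", errors="ignore")


long_message = "Application error: " + "x" * 5000
print(len(truncate_message(long_message).encode("utf-8")))
```

Counting bytes, not characters, matters because the limit applies to the encoded message.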

Conclusion

Since we have container logs anyway, why configure terminationMessagePolicy?

This was one of the constant questions I asked myself while brainstorming about terminationMessagePolicy.

Here are a few use cases where terminationMessagePolicy is super-useful.

Simplified error diagnostics

When a container fails, you can quickly diagnose the issue using kubectl, which gives a concise overview of the pod’s state, including its termination message.

If a container does not write a specific termination message, you will have to sift through potentially extensive pod logs.

Configuring terminationMessagePolicy and getting just the application failure logs is faster and simpler.

Separating your application and error logs

This can be a quick way to separate regular application logs from failure logs. You can then integrate both into your observability or log store tools.

Your application can write any failure logs to a particular file, and you can have a side-car container pushing these logs to your log store.

That can be a handy way to debug issues using failure logs.
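One way to get that separation inside the application is with Python’s standard logging module. The sketch below sends every record to stdout and also writes error-level records to a dedicated file that a side-car could ship. The logger name, the `build_logger` helper, and the temporary file path are assumptions for illustration.

```python
import logging
import os
import sys
import tempfile


def build_logger(error_log_path):
    """Send all records to stdout and ERROR-and-above records to a file too."""
    logger = logging.getLogger("frequent-crash")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls

    # Regular application logs go to stdout, as usual for containers.
    logger.addHandler(logging.StreamHandler(sys.stdout))

    # Failure logs are additionally written to a dedicated file.
    error_handler = logging.FileHandler(error_log_path)
    error_handler.setLevel(logging.ERROR)
    logger.addHandler(error_handler)
    return logger


# Assumption: a temporary file stands in for a path on a shared volume.
error_log_path = os.path.join(tempfile.mkdtemp(), "errors.log")
logger = build_logger(error_log_path)
logger.info("processing record 1")
logger.error("Application error: Data processing error")
```

In a pod, the error file would typically live on a volume shared with the side-car container.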

🙏 Thanks for your time and attention all the way through!


Till we meet again, keep making waves.🌊 🚀
